At Libeo, we build custom web-based platforms that empower businesses with secure, innovative tech. As someone passionate about AI and open source, these topics are close to my heart because they shape a future where technology enhances freedom rather than erodes it. That’s why I’m sharing this post. As AI reshapes our world, from autonomous vehicles to personalized assistants, open source and data sovereignty stand out as crucial for maintaining control and privacy.

The risks of proprietary AI systems

Proprietary AI, often controlled by tech giants like OpenAI, Google, and Meta, centralizes vast amounts of data on cloud servers. This creates serious vulnerabilities and raises one fundamental question: who really owns the data fueling these systems?

For instance, in Meta’s ecosystem (with real-world data pouring in from Ray-Ban glasses and social platforms), everything feeds into giant black boxes built on proprietary hardware and software that users cannot fully inspect or audit. The result is dependency: individuals and businesses quietly surrender control over their own data.

When you feed personal queries, memories, and thought processes into these models, you risk “subconscious censorship.” Once your data enters a proprietary system, you cannot take it back. Companies can copy it, manipulate it, distill it into smaller models, or train on it without your knowledge, subtly shaping outputs the same way social media feeds prioritize virality over balance. And while some providers state that they do not train their models on your data, we have no way to verify that claim.

This threat is not hypothetical. In five to ten years, we may wake up and realize we have outsourced our unique humanity (our perceptions, decisions, and creativity) to corporations whose business strategies do not put privacy first. Proprietary systems, like most generative AI used today, thrive on convenience, but sovereignty is the price.

Convenience is powerful, but the trade-offs are real, and some are far subtler than others:

- Data Lock-In. Your thoughts and memories get copied, distilled, and forever mixed into proprietary technology you no longer control.

- Subtle Manipulation. Outputs can gently steer your thinking and knowledge (“subconscious censorship”), exactly like today’s algorithmic feeds.

- Multi-Platform Integration Risks. Connecting your CRM, analytics, or internal tools to cloud AI workflows feels efficient until your data quietly trains someone else’s model or gets exposed in a breach.

- Dependency on Closed Ecosystems. From custom inference chips in robots to self-driving tech, the entire stack belongs to one company, with zero real transparency for end users.

These risks erode what makes us human. Keep them in mind whenever you decide how to use AI and how to preserve control and privacy in your business or personal life.

Why open source promotes data sovereignty

Data sovereignty (the right to control your own data) is no longer a buzzword; it is a necessity. Open-source AI returns power to users through one simple principle: verifiability.

As I always say: “Don’t trust, verify.”

That means open-source code on GitHub, LLMs running locally on your device, or trusted execution environments (secure hardware that can cryptographically attest to the code it is running, so tampering becomes detectable).
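
One small, everyday form of “don’t trust, verify” is checking that a model file you downloaded matches the checksum its publisher lists. The sketch below is only an illustration: the function names are hypothetical, and it assumes the publisher provides a SHA-256 checksum in hexadecimal.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large files are never loaded into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path: Path, published_hex: str) -> bool:
    """Compare the local file's hash with the checksum listed by the publisher.

    Assumption: published_hex is a SHA-256 digest in hex, possibly upper-case
    or followed by whitespace, as is common when copied from a release page.
    """
    return hmac.compare_digest(sha256_of_file(path), published_hex.strip().lower())
```

If the two values differ, the file is not the one the publisher released, and it should not be loaded. A matching checksum only shows that the file is the published one; it says nothing about whether the published file itself deserves your trust.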

Real-world examples already prove that full sovereignty is possible today. Tools like Ollama let you run capable open-weight models completely offline on your own computer, with zero data ever leaving the device. I agree that this may require some technical knowledge and a decent hardware setup, which isn’t a given for everyone, but there are other options available to facilitate your journey.

Projects like Maple AI show the next step: you chat and encrypt locally on your phone or computer, only ciphertext is sent to the cloud (or your home server), the computation happens inside a secure enclave, and the response returns encrypted. Your private keys never leave your device, yet you still get cloud-scale performance. This is not theory; it works right now.

The benefits are compelling enough to make open source the default choice for any serious AI strategy:

- Transparency and Control. Audit every line of code. No hidden backdoors, no surprises.

- Decentralization. Run models on your own hardware and escape centralized data centers prone to breaches, outages, or censorship.

- Innovation Without Lock-In. Open source turns the recursive self-improvement loop of AI into a global, unstoppable force: models, tools, and even robotics frameworks get better exponentially through worldwide collaboration, driving the cost of intelligence and labor toward zero while your data and infrastructure remain completely yours.

- Privacy Protection. When AI knows you intimately, only open source lets you verify that no one is secretly steering the output.
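
To make the local-first idea concrete, here is a minimal sketch of querying a model served by Ollama on your own machine. It assumes Ollama is running on its default local port (11434) and that a model has already been pulled; the model name `llama3.2` is only a placeholder, and the loopback check is an illustrative safeguard of my own, not something Ollama requires.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: Ollama's default local endpoint; adjust if your setup differs.
OLLAMA_URL = "http://localhost:11434/api/generate"
LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}


def is_loopback(url: str) -> bool:
    """Return True only if the URL points at this machine."""
    return urlparse(url).hostname in LOOPBACK_HOSTS


def build_request(prompt: str, model: str = "llama3.2",
                  url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request, refusing non-local endpoints."""
    if not is_loopback(url):
        raise ValueError(f"refusing to send prompt off-device: {url}")
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask_local("Summarize data sovereignty in one sentence.")` returns the model’s answer as a string. Pinning the endpoint to loopback is a deliberate design choice: a misconfigured URL fails loudly instead of quietly sending your prompt to a remote host.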

At Libeo, we bake these principles into our thought processes from the get-go, ensuring they’re at the forefront of our design strategies.

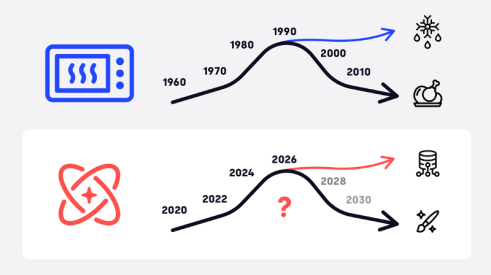

What to watch in 2026 and beyond

Hardware is advancing so quickly (just think about how powerful iPhones and Android devices have become in the last 10 years) that the balance is about to shift dramatically. I believe we are moving toward hybrid processing: chat and encrypt on your phone, send to your home server or a trusted enclave, and receive the response, with no third party ever seeing plaintext.

Moreover, all this hardware progress will enhance our automation capabilities and drive deflation in several domains, like manufacturing, ridesharing, transport, and knowledge work, creating genuine abundance and higher quality of life for everyone (more on that in another article). Privacy-first, verifiable systems will have to become the standard if we want to avoid losing control over our privacy.

While talking about privacy and its risks may sound pessimistic and gloomy, I am extremely hopeful for the future:

- Frontier-level models running entirely offline on phones and laptops in the coming years

- Billions of inference chips shipped in vehicles, robots, glasses, and homes, making edge intelligence the default

- Secure enclaves and verifiable compute becoming table stakes (like end-to-end encryption today)

- Hybrid local-first systems dominating, delivering maximum power with minimum exposure

These trends will deliver an abundant, user-centric future, but only if we collectively choose open source over proprietary walled gardens.

Don’t know where to start? Just take one small step this week:

- Explore open-source models like those on Hugging Face

- Spin up LibreChat or AnythingLLM with your own documents

- Try Maple AI once to feel what real privacy feels like

- Next time you paste something into an AI tool, ask yourself: “Am I OK if this data leaks and a competitor gets access to it?”

As a disclaimer, I still use NotebookLM, Claude, Grok, Perplexity, etc.; they’re brilliant tools. But I use them the way I use free public cloud storage: great for temporary files and non-essentials, never for archiving my private documents or family heirlooms.

Your data is an extension of your mind. Your memories, your private notes, your company’s strategy, your original ideas: once you’ve shared them, they’re no longer yours alone.

So treat them accordingly.

Protect what is yours, because no one else will do it for you.

I’d genuinely love to know: what is the first step you’re taking today to reclaim your data sovereignty? Drop it in the comments; let’s inspire each other.